GM and welcome to Milk Road AI PRO, the newsletter that believes that the AI boom is real, but the power to run it is non-existent.

My name is Vincent, and every two weeks I’ll be breaking down the biggest structural trends shaping the AI economy and what they mean for your portfolio.

The first two editions are FREE; after that, you'll need to sign up for Milk Road AI PRO to keep reading.

A little bit about me:

By day, I'm a strategy consultant who helps senior executives around the world grow their revenue and reduce costs.

But by night, I'm a retail investor just like you, and I’ve spent the last 5 years deep in crypto and the AI trade.

I'm here to help you make sense of all this, and avoid the vaporware lurking in the shadows.

To kick us off, we’re looking at how the AI power + infrastructure crunch could slam the brakes on the whole AI boom, and what that means for your portfolio.

Why is this topic important now?

For the last few years, the AI trade was easy: buy Nvidia, buy Microsoft, maybe sprinkle in some Palantir and call it a day. That was inning one.

Now we’re moving into inning two: AI is leaking into the real economy. Think data centers, grids, turbines, transformers, land, concrete.

If you’re an investor, the question isn’t just who wins AI, but rather what could quietly cap the upside.

Everyone’s staring at chips. Nvidia GPUs or Google TPUs?

I’d argue the real bottleneck isn’t silicon; it’s steel, copper, and electrons. It’s the physical infrastructure and electricity needed to power this whole thing.

Therefore, in this report we’ll unpack:

How AI data center electricity demand will 3–4x this decade

Why 2024–2025 looked calm, and why that calm ends starting in 2026

The 6 “pressure valves” that will delay, but not solve, the crunch

Which energy sources can scale fast enough (hint: it ain't nuclear)

How to position your portfolio for the picks-and-shovels opportunity

AI TURNS DATA CENTERS INTO A NEW INDUSTRIAL SECTOR

Money going into data centers (DCs) has almost doubled since 2022, hitting ~$500bn in 2024.

McKinsey estimates we’ll need ~$5.2T to reach 156 GW of AI data center capacity, roughly what the U.S. government spent fighting the COVID crisis.

And after going through the Hyperscalers’ (Microsoft, Amazon, Google) Q3 2025 earnings, the message was clear:

AI-related CapEx will not slow down.

Whatever the final number turns out to be, the takeaway is clear: massive investment is headed into AI DC buildouts.

So if we’re building this many DCs, how much energy will they need?

A “normal” 100 MW AI DC = power for ~100,000 homes.

The mega-campuses being built now use as much as 10-20x that (1-2 GW each).
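A quick way to sanity-check the ~100,000 homes rule of thumb is to convert a DC’s power into yearly energy and divide by typical household use. The sketch below is a rough model resting on two assumptions that are not from this newsletter: the DC runs flat out at its rated power all year, and an average U.S. home uses ~10,500 kWh per year. Under those assumptions, 100 MW lands at roughly 83,000 homes, the same ballpark as ~100,000.

```python
# Rough sketch: convert a DC's rated power into "homes' worth" of yearly electricity.
# ASSUMPTIONS (not from the newsletter): flat 24/7 operation at rated power,
# and an average U.S. home using ~10,500 kWh per year.
HOURS_PER_YEAR = 8_760
AVG_HOME_KWH_PER_YEAR = 10_500  # assumed household consumption


def homes_equivalent(dc_mw: float) -> int:
    """Average homes whose yearly use matches a DC running flat out at dc_mw."""
    dc_kwh_per_year = dc_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then x hours
    return round(dc_kwh_per_year / AVG_HOME_KWH_PER_YEAR)


# A "normal" 100 MW AI DC vs. mega-campuses at 10x and 20x that size
for mw in (100, 1_000, 2_000):
    print(f"{mw:>5} MW ~ {homes_equivalent(mw):,} homes")
```

Real sites rarely run at 100% of rated power and household use varies a lot by region, so treat this as an order-of-magnitude check, not a precise conversion.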

By 2030, global DC power use could hit 945-1,260 TWh (terawatt-hours), up from 415 TWh in 2024: roughly a 2.3-3x jump in demand over 6 years.
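Taking the 415 TWh (2024) and 945-1,260 TWh (2030) figures at face value, this short sketch works out the total growth multiple and the implied compound annual growth rate. It only does arithmetic on the numbers quoted above; the “low”/“high” scenario labels are my own.

```python
# Growth multiple and implied CAGR for global DC electricity use, 2024 -> 2030,
# using the figures quoted in the text.
BASE_TWH_2024 = 415
SCENARIOS_TWH_2030 = {"low": 945, "high": 1_260}
YEARS = 2030 - 2024


def growth(base: float, target: float, years: int) -> tuple[float, float]:
    """Return (total multiple, compound annual growth rate)."""
    multiple = target / base
    cagr = multiple ** (1 / years) - 1
    return multiple, cagr


for name, twh in SCENARIOS_TWH_2030.items():
    multiple, cagr = growth(BASE_TWH_2024, twh, YEARS)
    print(f"{name}: {multiple:.2f}x over {YEARS} years, ~{cagr:.1%} per year")
```

On these inputs, demand grows roughly 2.3x to 3x in total, which means compounding at about 15-20% every single year through 2030.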

That’s:

More than Japan’s total electricity use today.

More than U.S. aluminum, steel, cement, chemicals and all other energy-intensive goods combined.

Enough that U.S. DCs alone could exceed 10% of peak demand.

In other words: so much energy that we don’t really know how the grid will handle it or where the power will come from!

2024–2025: The last “easy mode” years

So far, AI DC power demand hasn’t broken the grid, at least not yet. Why is that?

Mainly for 3 reasons:

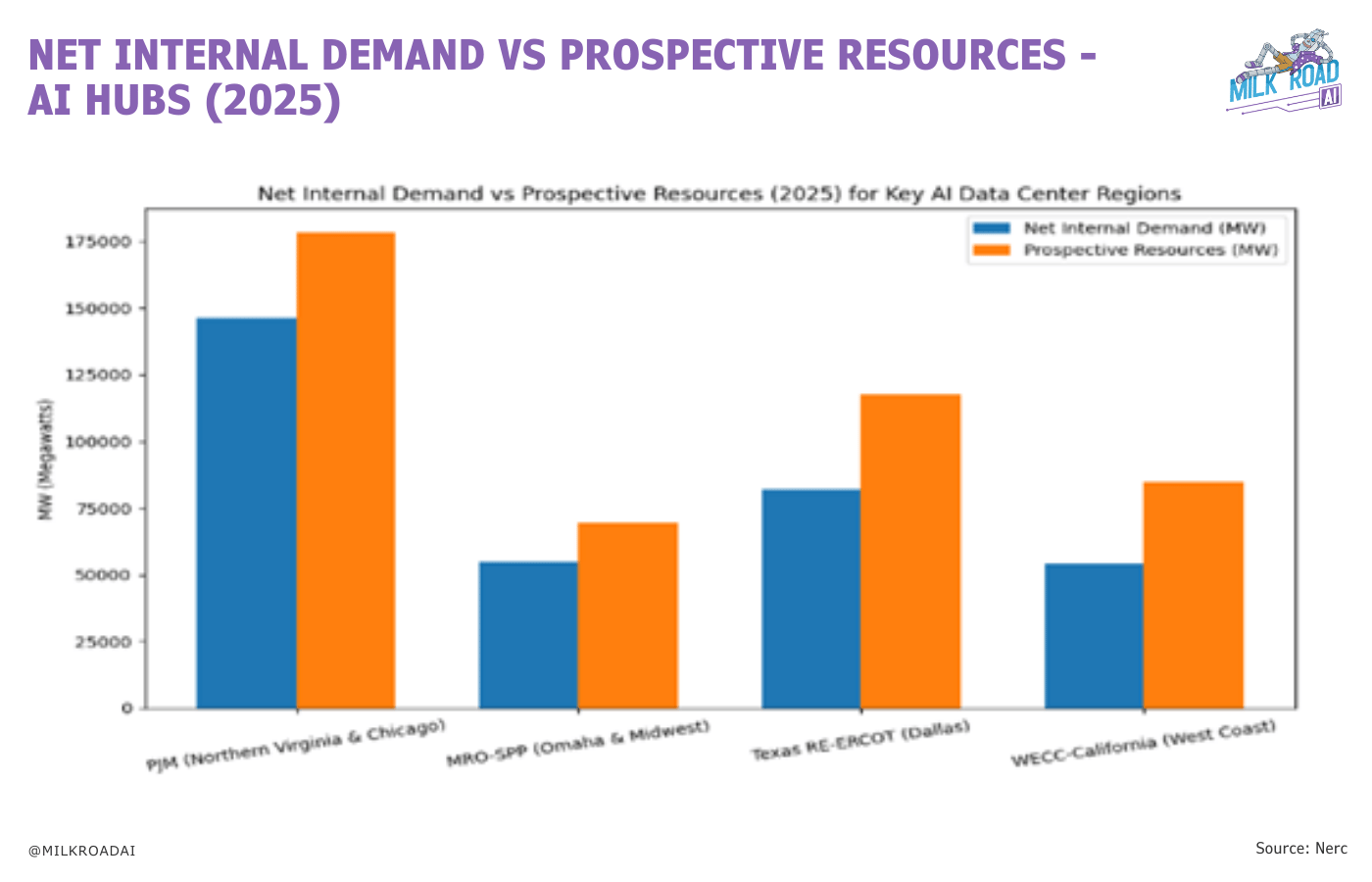

1/ There is slack power in the system (as you can see in the chart above).

Most DC hubs, power plants and grids weren’t running at 100% before AI showed up.

Early AI DCs are just eating up that slack, i.e., tapping off-peak capacity and underused plants.

That also explains why prices and stress on the grid have stayed pretty normal, for now.

2/ Wasted renewables finally found a buyer.

Wind and solar often produce more than the grid can absorb, so a lot of that power used to be thrown away.

Big Tech is now plugging AI loads into that oversupply and soaking up this free green energy.

3/ Hyperscalers are building brownfield (using existing sites), not greenfield (building completely new sites).

They’re stacking new servers onto existing campuses with existing grid connections, which avoids big, slow, expensive grid upgrades, for now.

2026-2028: When buffers run out

Those buffers will be squeezed hard starting in 2026!

Let me explain…

PJM (the largest U.S. regional transmission organization), whose footprint includes Northern Virginia and Chicago, is already near its physical limits.

In PJM’s latest capacity auction, DC demand added ~12 GW of load, pushing capacity prices to the ceiling and leaving no spare generation margin for 2026/27.

The CSIS (Center for Strategic and International Studies) puts it plainly: every new GW of DC capacity needs a new GW of power capacity (aka greenfield assets).

And NERC (North American Electric Reliability Corporation) warns that well over half of North America faces elevated blackout risk between 2025 and 2029, as AI-driven electricity demand explodes faster than the grid can expand.

In short: future DC buildouts may be at risk.

The IEA (International Energy Agency) agrees: it projects that ~1/5 of new DCs planned by 2030 could be delayed by energy bottlenecks.

This is especially true in the U.S., where the issue keeps mounting. Here’s why…

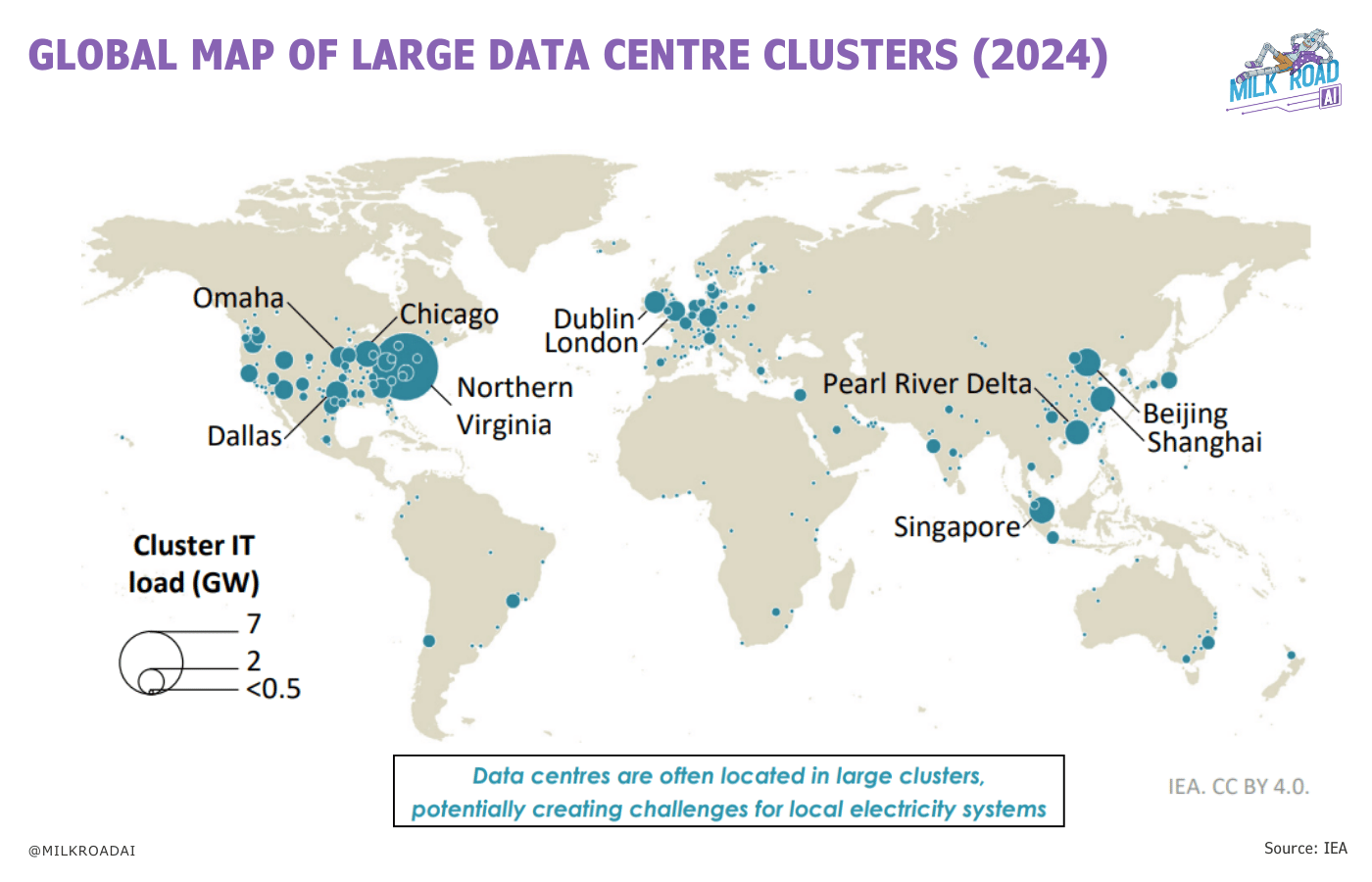

DCs tend to be geographically concentrated and located around cities.

In the U.S., 50% of DCs under development are in pre-existing large clusters, potentially raising risks of local bottlenecks.

What is actually scarce?

In the short to medium term, the constraint isn’t raw power; it’s infrastructure.

We lack the infrastructure to move power through the grid and connect new loads to the system.

Let me unpack this…

The U.S. is facing a structural shortage of transformers and switchgear, because every big DC and every new power plant needs them.

Transformers = the gear that steps voltage up or down, so power travels efficiently.

Switchgear = the grid’s circuit breaker and traffic controller.

OEMs report multi-year order books and rising prices for large transformers and switchgear, with lead times already ballooning to ~3 years.

Even as manufacturers ramp up production, scarcity will constrain DC hook-ups from 2026 onwards.

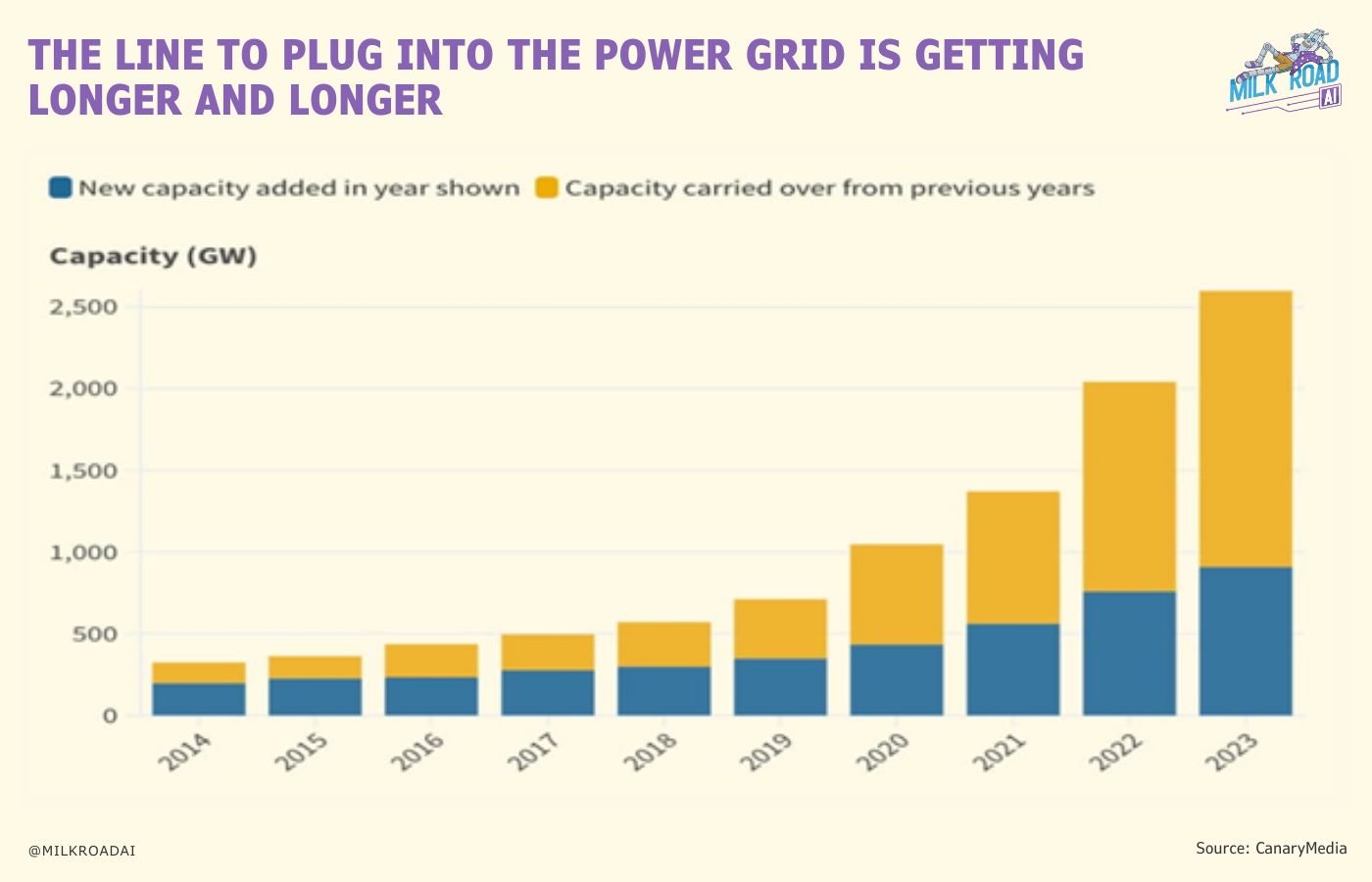

We’re not just struggling to move power through the grid; we can’t even connect new power supply fast enough.

Every new power plant (e.g., a wind or solar farm) must clear lengthy interconnection studies. Projects now spend a median 4–5 years from request to energization.

The DOE (Department of Energy) has already asked FERC (Federal Energy Regulatory Commission) to overhaul the process, because current rules are far too slow.

Additionally, local permitting issues add another choke point to this process.

DC-packed areas such as Northern Virginia, Atlanta, Phoenix or Las Vegas face mounting community pushback, which can add another 1–3 years of delay.

To sum it up: the power is there, but it can’t reach the AI loads that need it. Transformers, paperwork and interconnection queues are the core barriers right now.

How we muddle through 2026-2028

The issues described will hit us hard. We won’t fix them in 2026–2028, only soften the blow.

To do that, we’ll need to pull 6 rabbits out of the hat.

Rabbit 1: Squeeze more out of what we already have

We will keep existing gas, coal and nuclear plants running longer instead of shutting them down.

The grid itself will also have to work harder, with upgraded lines and equipment so it can safely carry more power.

Rabbit 2: Select who gets power first

Grid operators are likely to move the reliable players to the front of the line (e.g., Hyperscalers or projects with storage).

Smaller or riskier projects will get pushed back or dropped entirely.

Some DCs may strike direct deals with existing power plants to get electricity privately and skip the queue that forms around the public grid.

Rabbit 3: Use batteries

Batteries are the grid’s shock absorbers.

At DCs, they charge during quiet hours and discharge during peaks, so big sites pull less from the grid when everyone else is using power.

Out in the field, they soak up excess solar and wind and release it later when it’s actually needed.

During short outages or overloads, they step in as an instant backup, keeping the system stable and running smoothly.
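The charge-quiet, discharge-peak pattern at a DC site can be sketched in a few lines. This is a simplified model with hypothetical inputs: a flat 100 MW site, a 200 MWh / 50 MW battery that starts empty, an assumed 4-hour evening peak window, hourly time steps and no round-trip losses. Real battery dispatch also weighs prices, degradation limits and grid signals, none of which appear here.

```python
# Simplified time-window battery dispatch at a DC site (hypothetical inputs).
# Hourly steps, so 1 MW for 1 hour = 1 MWh; round-trip losses are ignored.


def shift_peak_draw(site_load_mw: list[float], peak_hours: set[int],
                    battery_mwh: float, battery_mw: float) -> list[float]:
    """Return the site's hourly grid draw when a battery charges off-peak
    and discharges during the grid's peak hours."""
    soc = 0.0  # state of charge in MWh; battery starts empty (assumption)
    grid_draw = []
    for hour, load in enumerate(site_load_mw):
        if hour in peak_hours:
            # Discharge, limited by power rating, stored energy and the site load
            discharge = min(battery_mw, soc, load)
            soc -= discharge
            grid_draw.append(load - discharge)
        else:
            # Recharge off-peak, limited by power rating and remaining capacity
            charge = min(battery_mw, battery_mwh - soc)
            soc += charge
            grid_draw.append(load + charge)
    return grid_draw


flat_load = [100.0] * 24          # a 100 MW DC running 24/7
evening_peak = {17, 18, 19, 20}   # assumed system peak: 5pm-9pm
draw = shift_peak_draw(flat_load, evening_peak, battery_mwh=200.0, battery_mw=50.0)
print("peak-hour grid draw (MW):", [draw[h] for h in sorted(evening_peak)])
print("max off-peak grid draw (MW):", max(d for h, d in enumerate(draw) if h not in evening_peak))
```

The trade-off shows up in the output: the site halves its draw during the evening peak, but pulls up to 150 MW in quiet hours while it recharges. That is fine as long as those quiet hours really have spare grid capacity.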

Rabbit 4: Bend the AI demand curve

Big Tech will learn to live with the power limits. New DCs will no longer switch on at full power, but will grow in stages as the grid catches up.

Energy-intensive jobs will move to off-peak hours, when renewables are plentiful. Granted, this is a rather theoretical argument, as AI DCs require 24/7 power.

Some projects will therefore shift to power-rich regions (e.g., Norway or the Middle East).
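Growing a campus in stages as the grid catches up boils down to a simple rule: each year, switch on whatever new grid capacity the operator allows, up to the planned total. A minimal sketch with made-up numbers (a hypothetical 1,000 MW campus and yearly grid allocations chosen purely for illustration):

```python
# Staged energization sketch: a campus only switches on as much capacity
# as the grid operator newly allocates each year (all numbers hypothetical).


def staged_rollout(planned_mw: float, new_grid_mw_by_year: dict[int, float]) -> dict[int, float]:
    """Return cumulative energized MW per year, capped at the planned total."""
    online = 0.0
    rollout = {}
    for year, new_grid_mw in sorted(new_grid_mw_by_year.items()):
        online += min(new_grid_mw, planned_mw - online)  # never exceed the plan
        rollout[year] = online
    return rollout


plan = staged_rollout(1_000, {2026: 200, 2027: 300, 2028: 300, 2029: 400})
for year, mw in plan.items():
    print(f"{year}: {mw:,.0f} MW energized")
```

In this toy case the campus only reaches full size in year four; any chips bought for the not-yet-energized share would sit idle in the meantime.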

Rabbit 5: Policy response

Regulators will have to open fast lanes so grid upgrades and critical projects can jump the queue.

Big energy users (e.g., Bitcoin miners) may also get paid to ease off during crunch hours, turning demand itself into a buffer.

Rabbit 6: Legacy Megawatts to AI

Old industrial sites (think: miners, smelters, chemical plants or steel mills) already have what AI desperately needs:

Grid connections, land and cooling.

Many of these businesses are structurally weaker than AI, but their power access may turn out to be worth more than their operating business.

Over time, the market may start repurposing and repricing these assets, not for what they produce today, but for the AI compute they could power tomorrow.

We’re already seeing this, mainly with Bitcoin miners pivoting their business models toward powering AI infrastructure.

Sidenote: We published a full deep dive this weekend in Milk Road Crypto PRO, breaking down which operators are best positioned and which we are avoiding. And yes, Martin broke down every single relevant stock. Check it out here.

The 6 rabbits in one line:

We’re not solving the power crunch, just slowing down the collision to avoid a collapse of the grid.

THE FUTURE ENERGY STACK

Once you see how hard the grid is creaking, the obvious question is: which power sources will actually supply all this new energy?

Spoiler: most of the usual sources discussed on X/Twitter are either too slow, in the wrong place or already sold out for this decade.

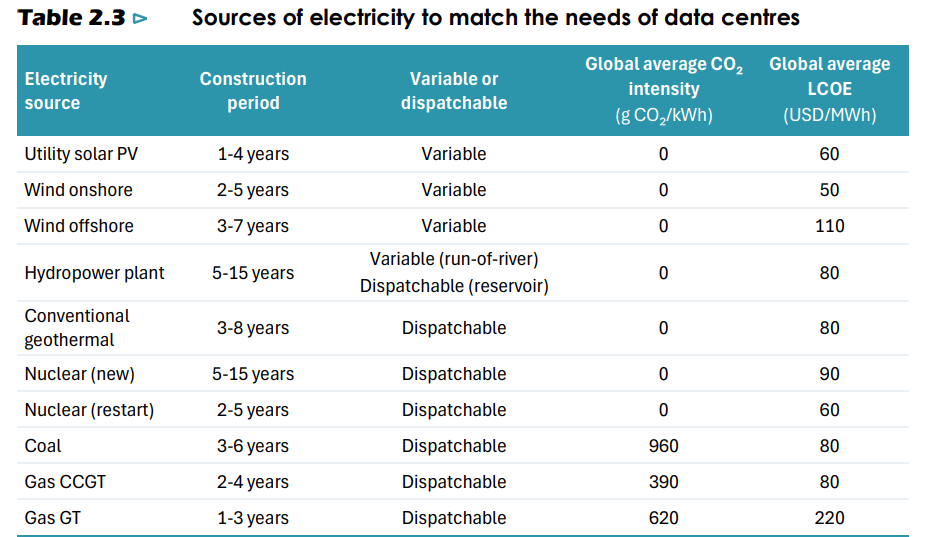

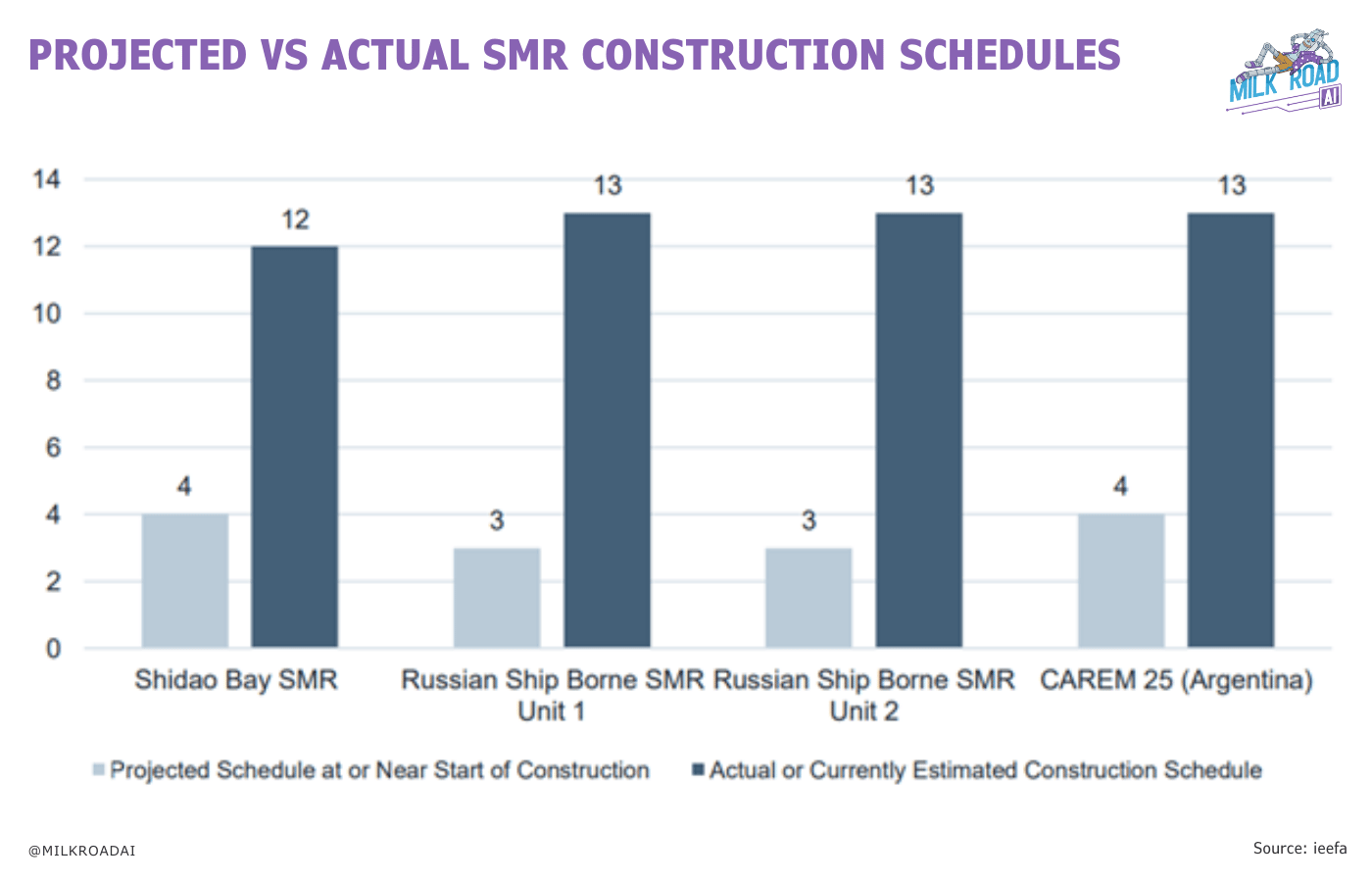

1. Nuclear: Too late for this party?

The technology looks perfect on paper: 24/7 generation, no carbon emissions and very dense power.

The problem?

Even in the best case, a new nuclear plant needs around 6-8 years to go from planning to actually producing power, roughly half for approvals and half for construction.

For early-stage projects (which most, if not all, current projects are), 10-15 years is more realistic once you count site permits, financing and reviews.

So, while small modular reactors (SMRs) may strengthen the grid in the 2030s, they won’t arrive fast enough to help with the AI power crunch this decade.

2. Gas: Fast in theory, sold out in practice

Right now, every Hyperscaler loves on-site gas-fired power generation.

Natural gas is abundant, provides a very reliable and cheap power supply, and is fast to spin up.

The problem?

Gas turbines are basically sold out into 2027-2028, and likely beyond that.

This comes as a result of massive orders for new gas turbines from utilities and project developers over the last 2 years.

This sudden surge in orders has hit a global gas turbine supply chain that saw little investment in manufacturing capacity during years of stagnant demand.

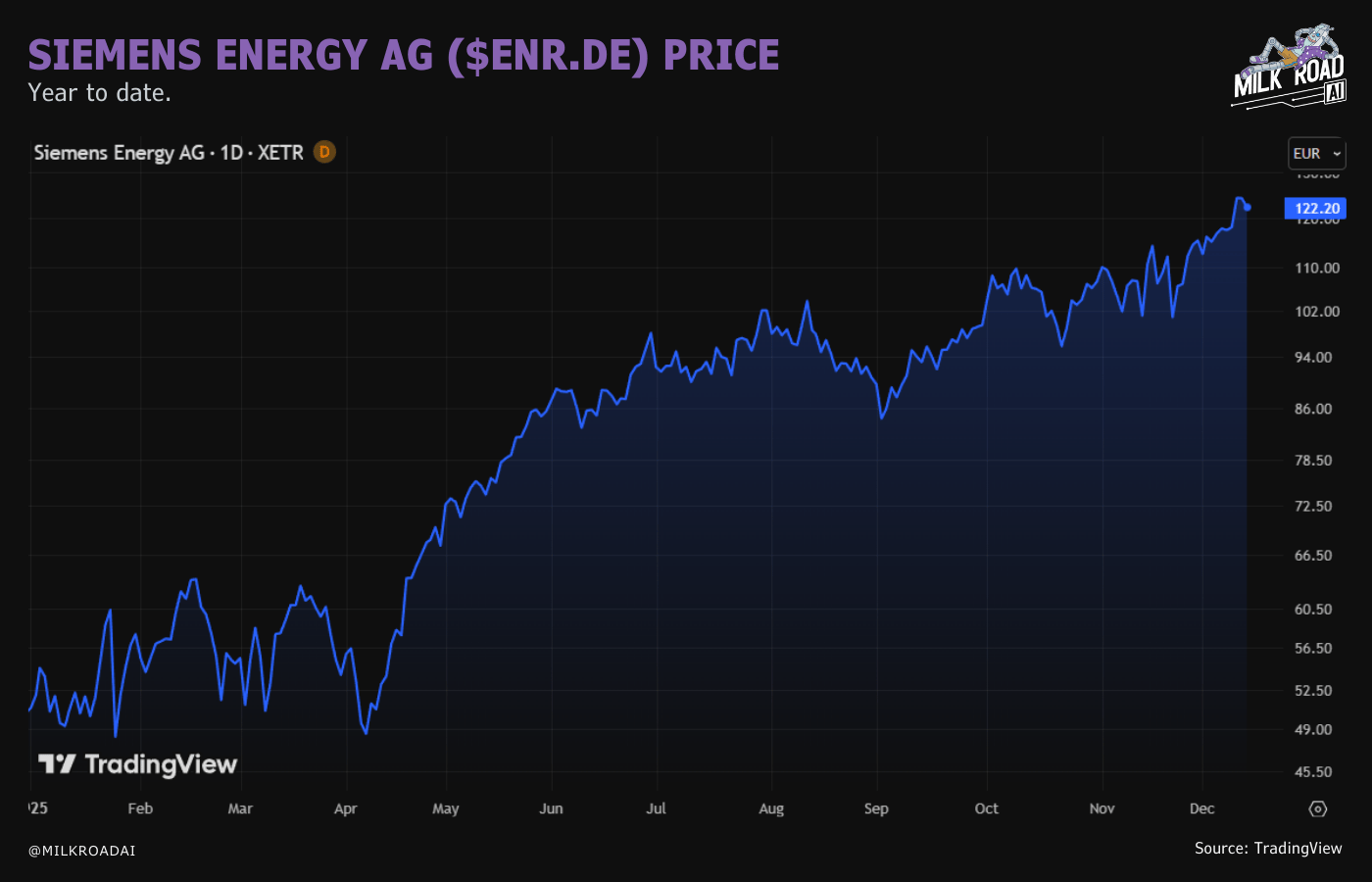

GE Vernova, Siemens Energy and Mitsubishi Power are the 3 big OEMs in the sector. Together they supply about 2/3 of global gas turbines.

All three report massive order backlogs, with waiting times for new turbines of up to 3 years.

So, while gas will definitely play a role, the turbine bottleneck means it can’t soak up enough of the energy demand this decade.

3. Hydro: Great baseload, painfully slow to build

Massive hydro dams are fantastic, offering cheap, steady, low-carbon power supply.

The problem?

From first study to first megawatt, it takes 10-15 yrs.

Operators are fighting multi-agency licensing, environmental concerns, land issues, and then the actual dam + reservoir construction.

So, while hydro is a great grid backbone story, it is not a fast-response solution to the AI energy crunch hitting the next 3-5 years.

4. Wind: Strong resource, wrong place and politics

Offshore wind has long construction cycles (3-7 years) and higher delivered costs thanks to complex logistics.

Onshore wind is much cheaper and faster to build (2-5 years).

The problem?

If we take a closer look at the U.S., the cheapest wind is in the Midwest and the Great Plains, not where the biggest AI DC clusters are.

Building the transmission to move that power is exactly what’s already stuck in queues.

On top of that, onshore wind faces heavy local and political pushback over tall turbines, big land footprints and visual impact.

~80% of wind developers name local land-use regulations as the main barrier to new projects.

Wind will keep growing, but it’s mostly in the wrong places and moving too slowly to be the primary fix for the 2020s AI power squeeze.

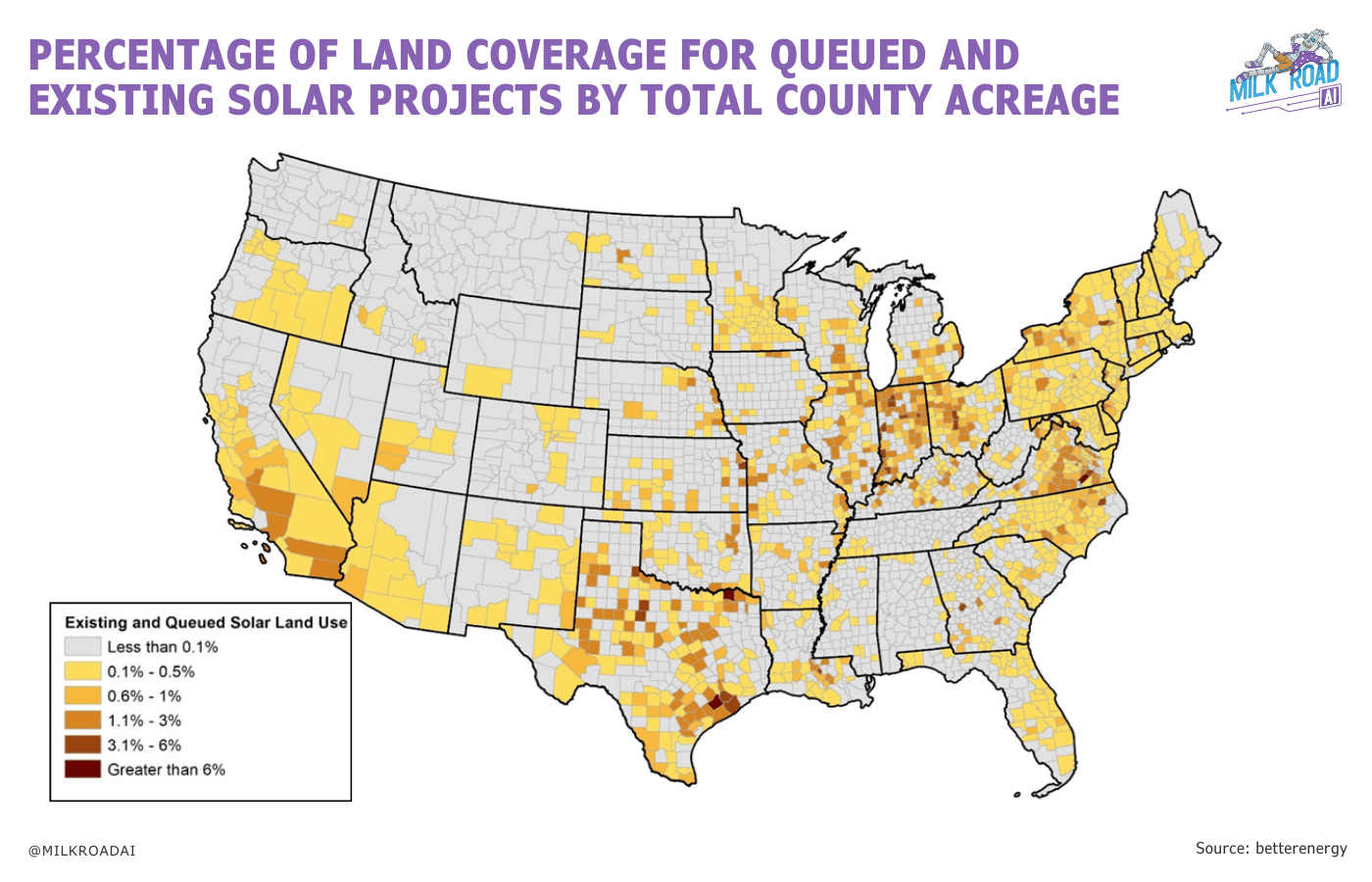

5. Solar + batteries: The only combo saving us this decade

After ruling out the usual suspects, we’re left with what Elon Musk refers to as “a giant fusion reactor in the sky”, aka solar energy, aka the sun.

Let’s get one thing out of the way: Trump in office ≠ no renewables.

The U.S. is actually adding solar at scale. In 2025, ~33 GW will be added to the system.

The cool thing about solar isn’t that it’s clean. It’s that it can be built unusually fast, and close to where the AI load needs it.

The average utility-scale solar project takes ~1-4 yrs to develop, which is extremely fast compared with the other sources.
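To line the sources up side by side, the sketch below takes the build timelines quoted in this section and asks when a project starting in 2026 (a hypothetical start date) could first deliver power. Gas is left out because the text only gives turbine waiting times, not full project timelines.

```python
# When could a project started in 2026 first deliver power?
# Ranges (years) are the timelines quoted in this newsletter; START_YEAR is hypothetical.
TIMELINES = {
    "solar (utility-scale)": (1, 4),
    "onshore wind": (2, 5),
    "offshore wind": (3, 7),
    "nuclear (best case)": (6, 8),
    "nuclear (early-stage)": (10, 15),
    "hydro": (10, 15),
}
START_YEAR = 2026


def online_window(start: int, years: tuple[int, int]) -> tuple[int, int]:
    """Earliest and latest year power could flow, given a (fast, slow) build range."""
    fast, slow = years
    return start + fast, start + slow


for source, years in sorted(TIMELINES.items(), key=lambda kv: kv[1]):
    first, last = online_window(START_YEAR, years)
    verdict = "could be online by 2030" if first <= 2030 else "misses 2030 entirely"
    print(f"{source:<22} {first}-{last}: {verdict}")
```

Solar is the only source whose whole range lands by 2030; wind only gets there at the fast end, before the transmission and permitting delays described above.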

Also, new solar capacity is clustering in Texas, Virginia, Ohio, Pennsylvania and California.

Basically, the same states where Hyperscalers are building their biggest DC campuses.

The problem?

The sun is not always shining, and DCs require a 24/7 energy supply (aka the intermittency issue).

Yes, solar only produces in daylight, with a midday peak and zero output at night.

Also, clouds sometimes ruin the party. This is called intra-hour variability, and it must be balanced as well (mostly by the grid).

Then there are the seasons. In many U.S. regions, winter solar output is 30-50% lower than in summer with the same capacity.

The solution?

Massive batteries come to the rescue, again.

These batteries store excess energy (typically produced around the midday peak) and release it when DC demand outstrips solar output.

They are also fast: they react within milliseconds to sudden drops or surges in power (e.g., when a small cloud drifts in front of the sun).
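A back-of-the-envelope model shows how much storage it takes to run a 24/7 load on solar. Everything here is an illustrative assumption rather than data: a flat 100 MW DC, an idealized clear-sky half-sine solar curve from 6am to 6pm, a 320 MW solar peak, no battery losses, and a winter case with output 40% lower (inside the 30-50% range mentioned above).

```python
import math

LOAD_MW = 100.0  # flat 24/7 DC load (hypothetical)


def solar_profile_mw(peak_mw: float, sunrise: float = 6.0, sunset: float = 18.0) -> list[float]:
    """Idealized clear-sky output per hour: half-sine between sunrise and sunset, zero at night."""
    profile = []
    for hour in range(24):
        t = hour + 0.5  # evaluate at the middle of each hour
        if sunrise <= t <= sunset:
            profile.append(peak_mw * math.sin(math.pi * (t - sunrise) / (sunset - sunrise)))
        else:
            profile.append(0.0)
    return profile


def storage_balance(load_mw: float, solar_mw: list[float]) -> tuple[float, float]:
    """Return (surplus MWh available to charge, deficit MWh the battery must cover)."""
    surplus = sum(max(s - load_mw, 0.0) for s in solar_mw)
    deficit = sum(max(load_mw - s, 0.0) for s in solar_mw)
    return surplus, deficit


summer = solar_profile_mw(peak_mw=320.0)
winter = [0.6 * s for s in summer]  # assumed 40% lower winter output
for season, profile in (("summer", summer), ("winter", winter)):
    surplus, deficit = storage_balance(LOAD_MW, profile)
    status = "battery can be refilled daily" if surplus >= deficit else "shortfall: needs grid or backup"
    print(f"{season}: solar {sum(profile):,.0f} MWh/day, "
          f"battery must cover {deficit:,.0f} MWh, surplus {surplus:,.0f} MWh -> {status}")
```

With these made-up inputs, a 100 MW site needs on the order of 1.3 GWh of storage (about 13 hours at full load) to get through each night, and the same panels fall well short in winter. The model also ignores multi-day cloudy spells, which is where the grid or backup generation still has to step in.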

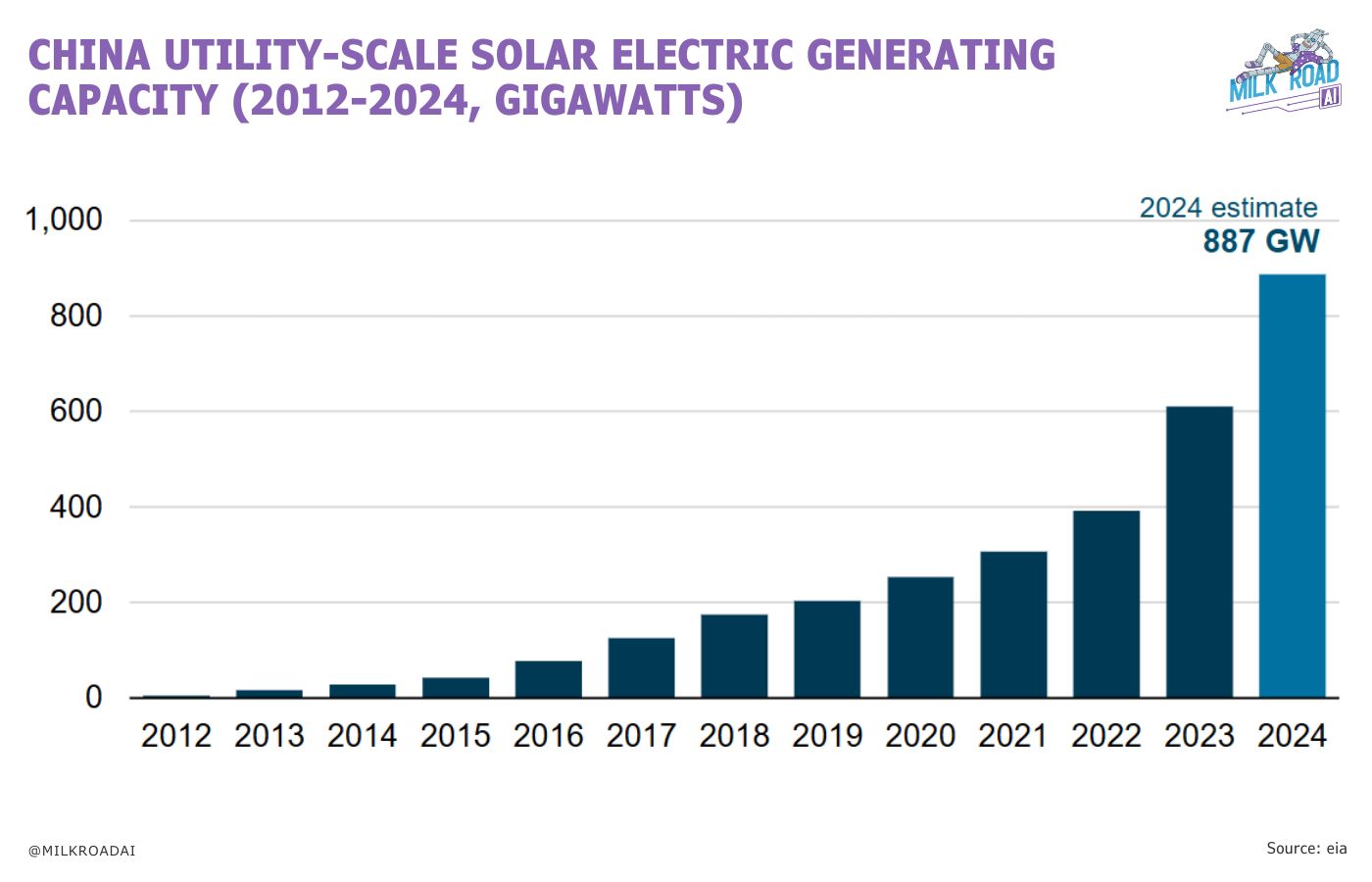

Finally, China has been massively subsidizing solar buildout for years, effectively betting that this is the long-term backbone of cheap power.

SO HOW CAN WE TRADE THIS?

Before we jump into the winners of this thesis, let’s discuss which parts of the AI sector may face some headwinds.

Hyperscalers & AI infra clouds (Google, Amazon, Microsoft, Oracle & others)

They are deploying tens of billions into AI CapEx because demand looks massive.

To finance it, they are increasingly taking on debt.

However, the real-world build-out of power, grid connections and DC shells is trailing that spend.

Over time, this could imply that a chunk of “planned” AI capacity won’t energize as quickly as their roadmaps assume.

So AI revenues will still rise, but possibly at a slower rate than the market currently expects, because their ability to scale AI capacity (e.g., cloud services) is limited.

That mismatch could trigger multiple compression over the next 1–3 years (aka earnings up, but share prices lag as expectations reset).

With the added debt, the compression could be amplified, because interest payments leave less room for disappointment.
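Two bits of arithmetic sit behind this argument. Since share price = EPS × P/E, earnings can grow while the price falls if the multiple compresses; and with a fixed interest bill, a given miss in operating income becomes a bigger miss in pre-tax earnings. The numbers below are hypothetical and only illustrate the mechanics.

```python
# Hypothetical illustration of multiple compression and debt amplification.


def price_change(eps_growth: float, pe_before: float, pe_after: float) -> float:
    """Price = EPS x P/E, so the price change combines EPS growth and the re-rating."""
    return (1 + eps_growth) * pe_after / pe_before - 1


def pretax_miss(expected_op_income: float, actual_op_income: float, interest: float) -> float:
    """Shortfall in pre-tax income vs. expectations, given a fixed interest expense."""
    expected = expected_op_income - interest
    actual = actual_op_income - interest
    return actual / expected - 1


# Earnings up 15%, but the P/E resets from 30x to 24x
print(f"price change: {price_change(0.15, 30, 24):+.0%}")
# The same 10% operating miss, without debt and with a 30-unit interest bill
print(f"pre-tax miss, no debt:   {pretax_miss(100, 90, 0):+.1%}")
print(f"pre-tax miss, with debt: {pretax_miss(100, 90, 30):+.1%}")
```

In this toy case, earnings grow 15% yet the stock drops about 8%, and a 10% operating miss becomes a roughly 14% pre-tax miss once interest is in the picture.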

GPU vendors (Nvidia & others)

Yes, they will still sell every chip they produce in the short term (the next year or more).

But at some point, customers will be sitting on orders they can’t energize (this concerns mainly frontier training clusters, not inference capacity).

We’ve already seen an early version of this with CoreWeave in Q3 2025.

They had to cut CapEx guidance because they didn’t have enough powered shells to install GPUs into (shares fell ~15% on the news).

If that pattern spreads across more GPU buyers, orders will eventually start getting paced to real energization timelines.

In that scenario, GPU vendor earnings still grow, but the market could re-rate the story, leading to multiple compression similar to what hyperscalers might face.

But there is some nuance to this:

Nvidia’s multiple isn’t as stretched as many hyperscalers’, so the risk may be smaller.

And Nvidia is leaning harder into inference, including edge AI devices such as humanoids and robotaxis (e.g., Jetson-type platforms as the brains for humanoids).

That could open a new S-curve and keep sentiment strong even if training demand is power-gated.

Now, let’s focus on the winners…

If the thesis is right, the beneficiaries should be:

Utility-scale solar and batteries near DC clusters.

Grid hardware and transmission builders.

Battery storage integrators and renewables-heavy utilities.

Simply put: We want to own the picks-and-shovels for the AI energy rush.

Identifying single equity plays is always hard.

Yes, Tesla may be an attractive play as it scales its battery business. But its story is influenced by many other business units, especially robotaxis and humanoids.

So, to cover the broader space, the following 2 plays emerge as attractive risk/reward opportunities:

1. Energy iron plays: Small-cap stocks

Obviously, the big (gas turbine) OEMs have already had a strong run this year.

They are production constrained in the short term, but a big backlog can still turn into years of high margin earnings and pricing power.

But if you’re looking for more asymmetry, the upside may be in smaller names tied to:

Transformers and switchgear that unlock new data center connections.

Transmission components (cables, towers).

Battery storage integrators near AI clusters.

These smaller players benefit from the same surge in orders, but with fewer investors already piled in.

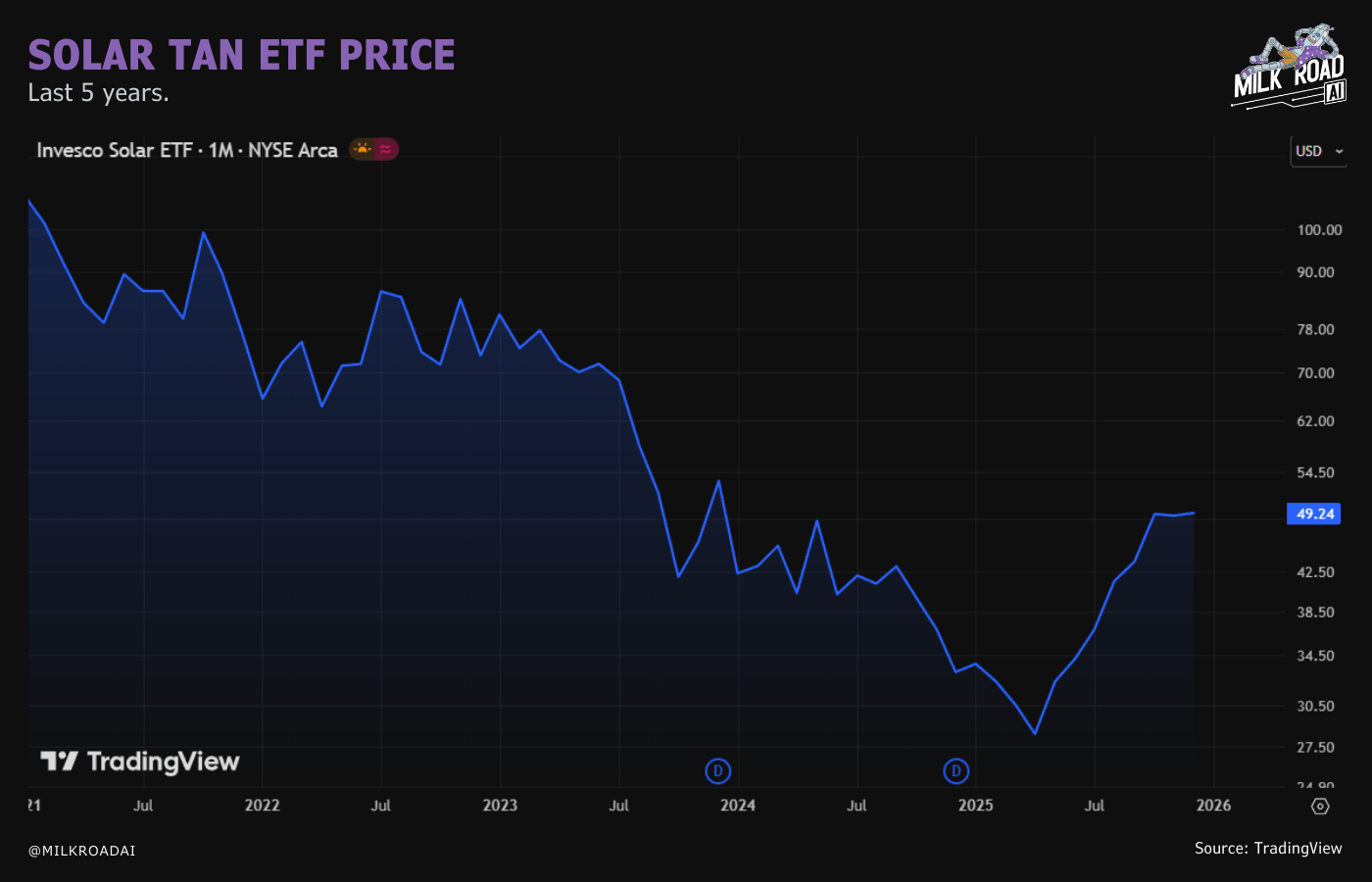

2. Energy stack play: Solar TAN ETF

The solar trade has been ugly the last few years.

The sector is down ~50% from its 2021 peak, after the Covid-era green stimulus and the “infinite installations” narrative faded, especially in Europe.

But solar isn’t dead!

If anything, it now benefits from the Lindy effect.

The longer something survives, the longer it’s likely to keep surviving. Solar has been around for decades, and costs are down ~80–90% since 2010.

Now the AI energy crunch is screaming for fast, cheap power.

Solar is one of the few technologies that can actually deliver that in this decade, which makes it very likely to stick around and grow.

Invesco’s solar ETF (TAN) is still ~50% below its highs.

With the underlying tech proven and a new AI-driven demand story building, the downside looks limited, while you keep the upside from a re-rating over the next few years!
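One piece of arithmetic behind the “keep the upside” argument: percentage losses and gains are asymmetric, so an asset sitting 50% below its highs has to double just to get back there. A tiny sketch (the ~50% drawdown is the figure quoted above; nothing here predicts that TAN will actually recover):

```python
def gain_to_recover(drawdown: float) -> float:
    """Percentage gain needed to return to the prior high after a given drawdown."""
    return 1 / (1 - drawdown) - 1


for dd in (0.25, 0.50, 0.60):
    print(f"{dd:.0%} below highs -> needs {gain_to_recover(dd):+.0%} to get back")
```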

Bottom line: AI relies on scarce physical infrastructure and power

AI’s first bottleneck was chips. The next one is power & physical infrastructure.

Over the next few years, DCs will turn into a new heavy industry, smashing into grids, transformers and interconnection queues that weren’t built for this much load.

The short term is all about workarounds: keeping old plants alive, rationing hookups, bending demand, and stuffing the system with batteries.

But the only combo that can really scale this decade and near AI clusters is solar + storage.

For investors, that means a great risk/reward play isn’t guessing the next silicon or model winner.

It’s owning the picks and shovels of the AI power rush: grid hardware, batteries, and solar.

Alright, that’s all I’ve got for you today, hope you found it valuable!

Respond to this email to let me know your thoughts on this first edition, and on the new Milk Road AI PRO format in general!

I’m keen to hear what you think.

Catch you in the next one. 🫡